AI Knitwear

Photo credit: @anthonyespinostudio

Post-graduate Fellowship project

Time: July 2019 - Dec 2019

Part of the summer research project SAMPLER, led by Elise Co (the earlier AI pattern research part)

Responsibility and Workflow:

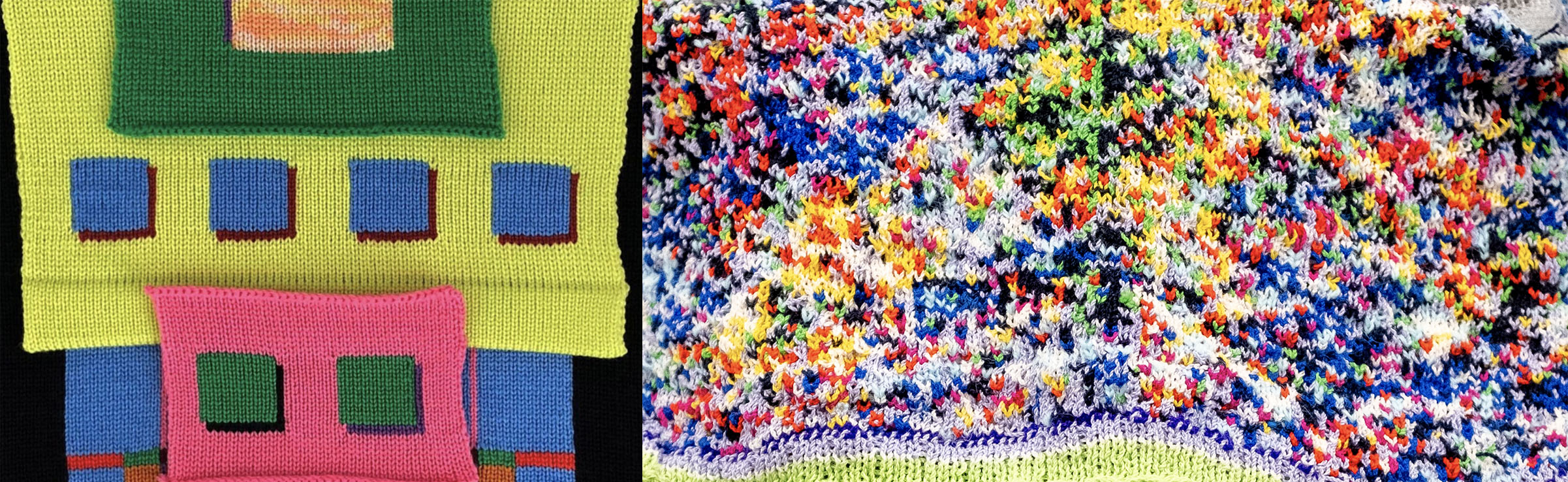

- Concept development : Created a series of knitwear pieces that encode algorithmic messages generated by machine learning to illustrate a brand-new knitted fashion aesthetic.

- Research : Researched and experimented with different machine learning algorithms to identify the most suitable one; researched the current mechanisms and limitations of machine knitting and speculated on the future direction of knitting.

- Coding and prototyping : Integrated generative machine learning algorithms on custom knitting pattern datasets; built a program to facilitate this new way of knitting.

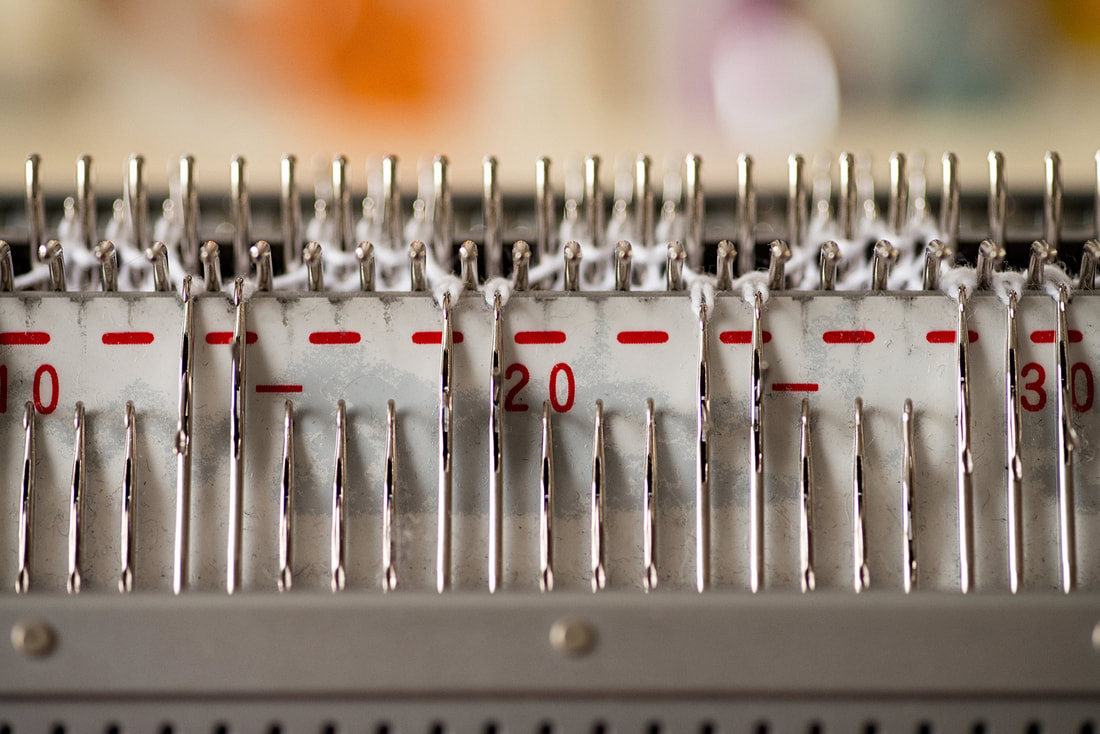

- Making : Knitted the swatches on a Brother KH940 and a Brother Bulky, on both single and double beds, using different techniques. Used the swatches to make a finished garment.

- Post-production : Photography and branding for dissemination and documentation of the AI knitwear fashion line.

INTRO

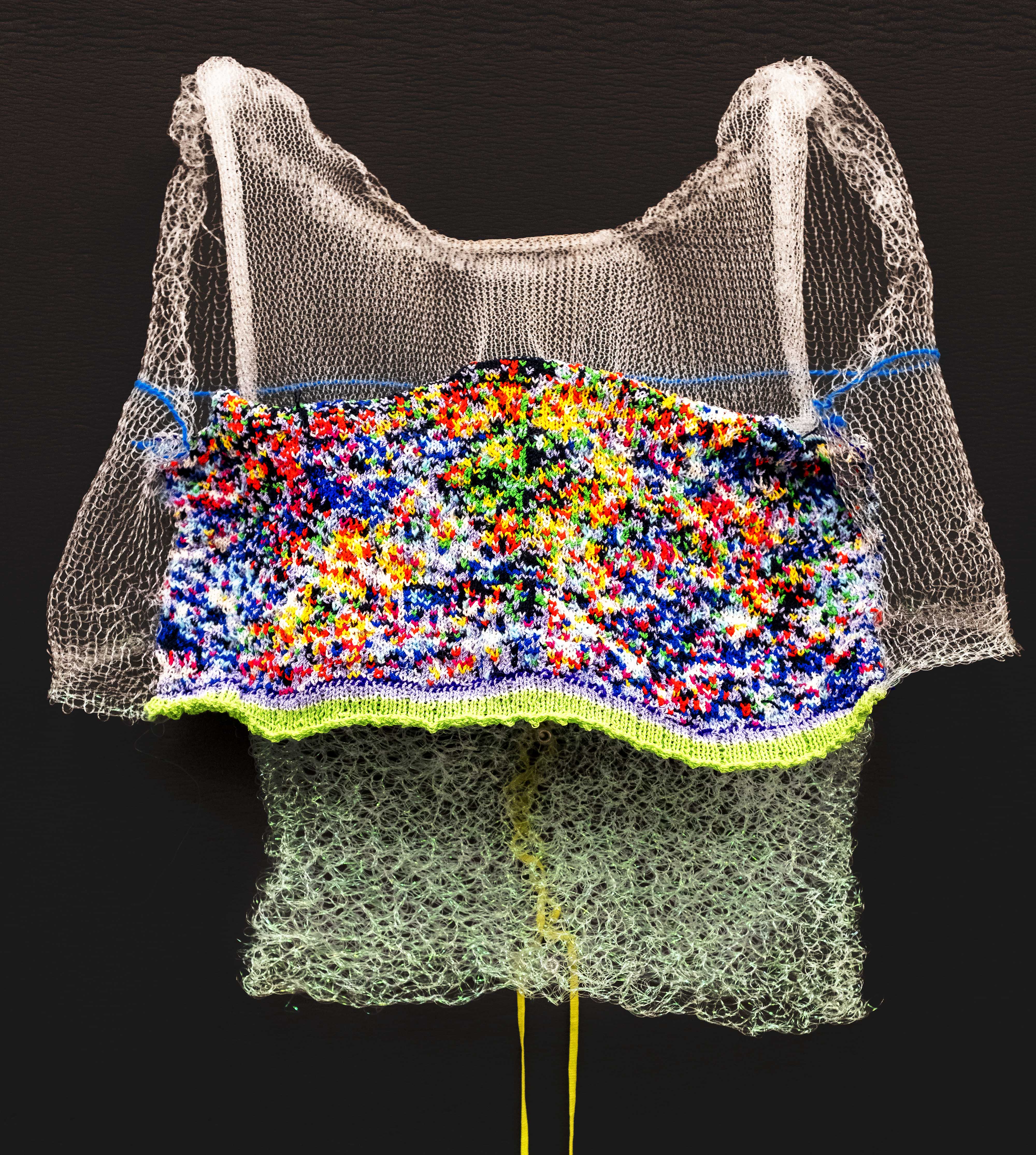

AI Knitwear explores the possibility of integrating machine learning algorithms with traditional knitting techniques by examining the impact of machine-generated patterns and aesthetics on our everyday wearables: garments. Due to the glitchy nature of AI-generated patterns, the project challenges current knitting techniques in both mechanism and aesthetics.

BACKGROUND & RESEARCH

1. Knitting machines: What a knitting machine looks like and how it produces fabric (the carriage can be motorized or pushed by hand):

Limitations of the current home knitting machine:

a. Width: Limited to the width of the knitting machine bed.

b. Multicolor: Limited to 2 colors for single-bed knitting and 4 colors for double-bed knitting. For home knitting machines, 4 colors is usually the maximum even with a color changer integrated. The more colors are used, the more floats build up at the back, giving single-bed fabric an uneven surface, while double-bed fabric becomes stiffer; both degrade the fabric.

c. Customized patterns: Not really accessible; they take a lot of time and effort, or money.

d. Intarsia: A technique used to knit multicolor pattern work. However, it can only apply blocks of color rather than single scattered pixels of color (see image below).

e. Open-source knitting machine hacking programs (e.g. AYAB): These support only up to 6 colors per pattern, and performance is substandard when knitting 6 colors.

Opportunities for innovation:

a. A multicolor changer that supports an unlimited number of colors (may require disassembling and reassembling the machine).

b. A program that connects to the machine and supports an unlimited number of colors.

c. A machine or carriage that knits scattered multicolor work without leaving many floats at the back (single bed) or making the fabric overly stretchy or stiff (double bed).

2. Knitting patterns & AI algorithms:

What a knitting pattern chart looks like:

A knitting pattern chart is simply pixel colors on a grid. One square represents one stitch on the swatch. If the swatch is bigger than the chart, the pattern repeats: for example, if the swatch is 100 needles wide by 100 rows and the pattern chart is 10 by 10, the pattern repeats 10 times across each row. With the pattern chart, a knitter can knit the design as multicolor work or as a structural pattern such as lace.
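The repeat behaviour described above can be sketched in a few lines of Python. This is only an illustration: the function name `tile_chart` and the list-of-lists chart representation are assumptions made for this example, not part of any knitting software.

```python
def tile_chart(chart, width, height):
    """Repeat a small pattern chart to cover a swatch of width x height stitches."""
    chart_rows = len(chart)
    chart_cols = len(chart[0])
    return [
        [chart[r % chart_rows][c % chart_cols] for c in range(width)]
        for r in range(height)
    ]


# A 2 x 2 checkerboard chart: 0 and 1 stand for two yarn colors.
checker = [[0, 1],
           [1, 0]]

swatch = tile_chart(checker, width=8, height=4)
for row in swatch:
    print("".join("#" if stitch else "." for stitch in row))
```

With a 10 by 10 chart and a 100 by 100 swatch, the same function places 10 repeats of the chart in every row.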

Example (images from the Internet):

2-color checkerboard swatch vs. checkerboard lace pattern swatch

From the example above, we can see that with different knitting techniques, one pattern chart can produce very different fabrics. This is one of the things that fascinates me about knitting: it’s like computer graphics and after effects, but instead of digital post-production, this is physical post-production. With different combinations of yarn, gauge, technique, and single- or double-sided fabric, you can create unlimited physical effects from the same digital image.

After I spent days collecting various knitting pattern charts for my AI training dataset, I built a fundamental understanding of those patterns and started to categorize them:

- Traditional patterns

- Figurative patterns

- Geometric patterns

- Abstract patterns

Traditional patterns are usually those we often see on sweaters from the 90s, while figurative and geometric patterns are largely self-explanatory. I found abstract patterns the most interesting, with the most potential to surprise, because they are usually unexpected. It is like machine learning: you never know which features the algorithm decides to pick up, or why it “thinks” the result it generates is as real as a human-made one.

After gaining enough knowledge and hands-on knitting experience, I moved on to pattern generation, analysis, design, and final production.

ALGORITHM & GENERATIVE PATTERNS

For knitting pattern generation, I used an algorithm called GAN (Generative Adversarial Network). The algorithm learns features such as color, texture, and composition from the input images, mimics them, then outputs a set of images that it “thinks” are as real as the input. I experimented with 3 different GAN algorithms and 5 different datasets. Here are some results generated by the algorithms:

1. Trained with multi-colored pattern charts

2. Trained with only black and white pattern charts

It’s easy to see that the second set of results is more “successful” than the first. The first set is still struggling to capture the gridlines, structure, and color, while the second set has clear gridlines and pixel blocks. This is because the second set has “cleaner” data to learn from; a colored dataset requires more images and more training time. However, what caught my eye is the first set of results. The intermediate output, or the output considered a “collapse”, is a perfect representation of how the machine’s neural network is wired: a machine-generated aesthetic. In fact, I got similar results in my last project working with other GAN models, but there the glitchy colors showed up in only part of the image rather than across the whole image. Therefore, I want to learn more about these glitchy machine results, the message encrypted in them, and their implications for us: knit these glitchy images, make them into garments and wearables, and use, wear, and test them in real-life contexts.

MAKING

PRE-PRODUCTION

Work with algorithms to generate desired patterns:

- Choose a machine learning model

- Prepare the dataset

- Train

- Test the result

PRODUCTION

Adjust the generative result to make it suitable for machine knitting:

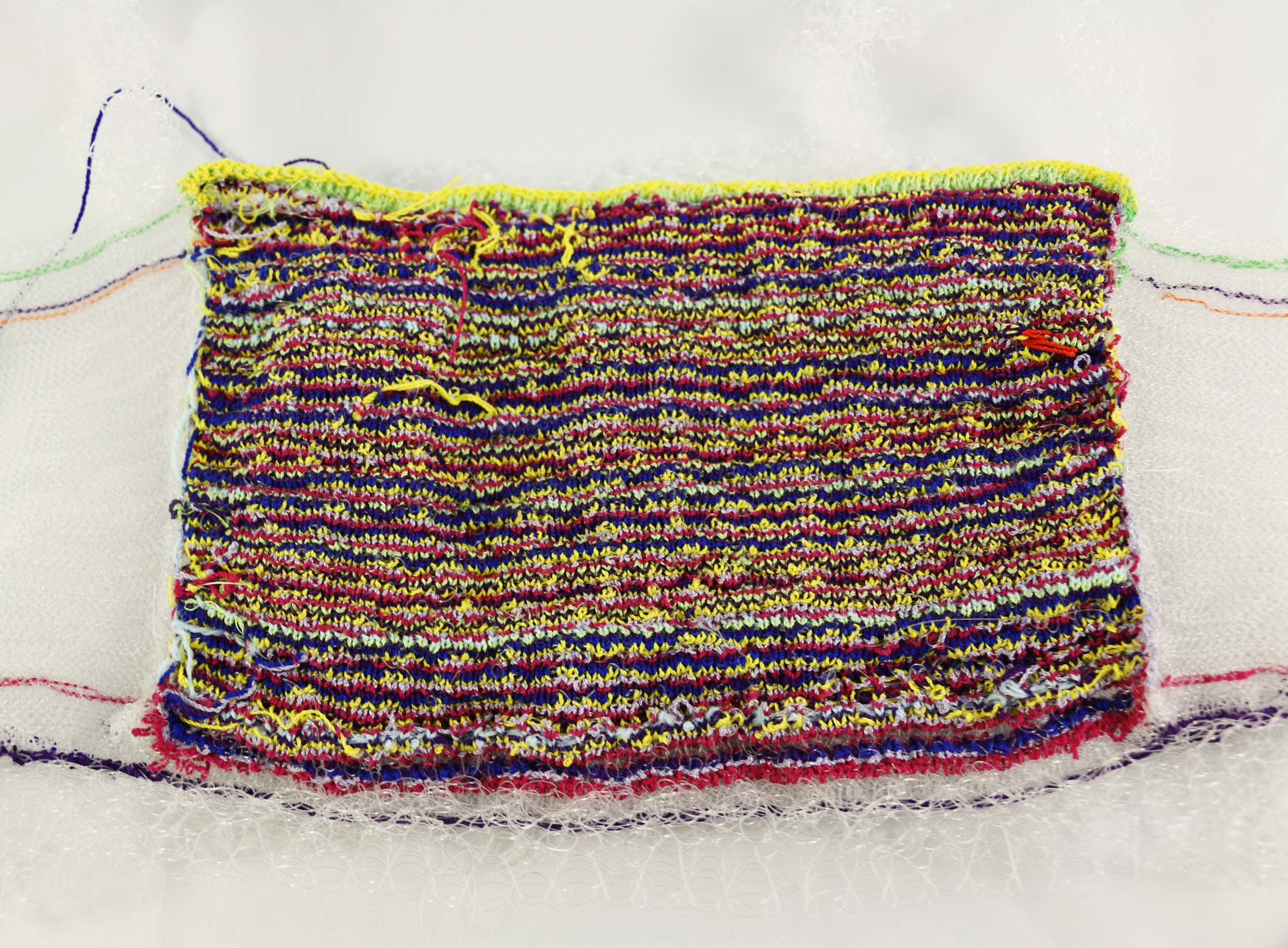

︎Down-sample the result:Due to the limitation of multi-color knitting(usually 2-4 color for machine knitting), I down-sampled the pattern to 10 colors to start with(the least number of colors that still retains the original glitchy aesthetic).

Left: generative result. Right: down-sampled image.
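The write-up does not say which tool or method was used for the down-sampling step. As one possible sketch, the Python below uses simple popularity quantization: keep the most frequent colors as the palette and snap every other pixel to its nearest palette color. The function names, the RGB-tuple image representation, and the choice of this particular technique are all assumptions made for illustration.

```python
from collections import Counter


def nearest(color, palette):
    """Return the palette color closest to `color` (squared RGB distance)."""
    return min(palette, key=lambda p: sum((a - b) ** 2 for a, b in zip(color, p)))


def reduce_colors(pixels, n_colors):
    """Keep the n_colors most frequent colors; remap all pixels onto them."""
    counts = Counter(px for row in pixels for px in row)
    palette = [color for color, _ in counts.most_common(n_colors)]
    reduced = [[nearest(px, palette) for px in row] for row in pixels]
    return reduced, palette


# Tiny 2 x 3 example image (rows of RGB tuples), reduced to 2 colors.
image = [
    [(0, 0, 0), (0, 0, 0), (10, 10, 10)],
    [(255, 255, 255), (255, 255, 255), (0, 0, 0)],
]
reduced, palette = reduce_colors(image, n_colors=2)
print(palette)
print(reduced)
```

For the project's case, `n_colors` would be 10. Other quantization methods, such as median cut or k-means, would give different palettes and may preserve the glitchy look better or worse.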

- Replace color based on yarn choice:

Based on the colors of the yarns on hand, replace certain colors in the image to simulate the final product’s color palette; for example, replace grey with light purple.
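A color swap like this amounts to a lookup table applied to every pixel. The sketch below is a minimal illustration; the RGB values chosen for grey and light purple are placeholders, not the actual yarn colors, and `replace_colors` is a hypothetical helper name.

```python
# Placeholder RGB values; the real yarn colors are not given in this write-up.
GREY = (128, 128, 128)
LIGHT_PURPLE = (200, 162, 200)


def replace_colors(pixels, swaps):
    """Return a copy of the image with every color in `swaps` replaced."""
    return [[swaps.get(px, px) for px in row] for row in pixels]


pattern = [
    [GREY, (0, 0, 0)],
    [(0, 0, 0), GREY],
]
recolored = replace_colors(pattern, {GREY: LIGHT_PURPLE})
print(recolored)
```

Colors that are not listed in the lookup table pass through unchanged, so the same function can apply one swap or a whole yarn palette at once.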

- Make it “machine readable”:

Since no free or even low-cost program supports 10-color patterns on the machine, I had to find a way to make the knitting process less painful. I wrote a simple program in Processing to make it easier to translate the pattern from the computer to the knitting machine.

Demo of the program:

The program separates the pattern by color and indicates the row and needle position for each pixel, which saves me a lot of time counting and memorizing the pattern.
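The original program was written in Processing and is not reproduced here. The Python sketch below only illustrates the same idea of separating a chart by color and listing row and needle positions. The function names, the string-based chart, and the numbering convention (rows from 1 at the top, needles from 1 at the left) are assumptions; a real machine bed numbers needles differently.

```python
def to_ranges(needles):
    """Collapse sorted needle numbers into inclusive (start, end) runs."""
    runs = []
    for needle in needles:
        if runs and needle == runs[-1][1] + 1:
            runs[-1][1] = needle  # extend the current run
        else:
            runs.append([needle, needle])  # start a new run
    return [tuple(run) for run in runs]


def row_instructions(chart):
    """Return, for every row, the needle runs that each color occupies."""
    instructions = []
    for row_number, row in enumerate(chart, start=1):
        needles_by_color = {}
        for needle, color in enumerate(row, start=1):
            needles_by_color.setdefault(color, []).append(needle)
        instructions.append(
            (row_number, {c: to_ranges(n) for c, n in needles_by_color.items()})
        )
    return instructions


# Each letter stands for one yarn color; each string is one row of the chart.
chart = ["AABBA",
         "ABBBC"]
for row_number, colors in row_instructions(chart):
    for color, runs in colors.items():
        print(f"row {row_number}, color {color}: needles {runs}")
```

Grouping consecutive needles into runs keeps the per-row instructions short, which matters when a row contains up to 10 colors.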


POST-PRODUCTION

To knit the three pieces shown at the beginning of this document, I used three different techniques. All are traditional knitting techniques, but because of the glitchy patterns and the number of colors I was using, I had to apply them differently:

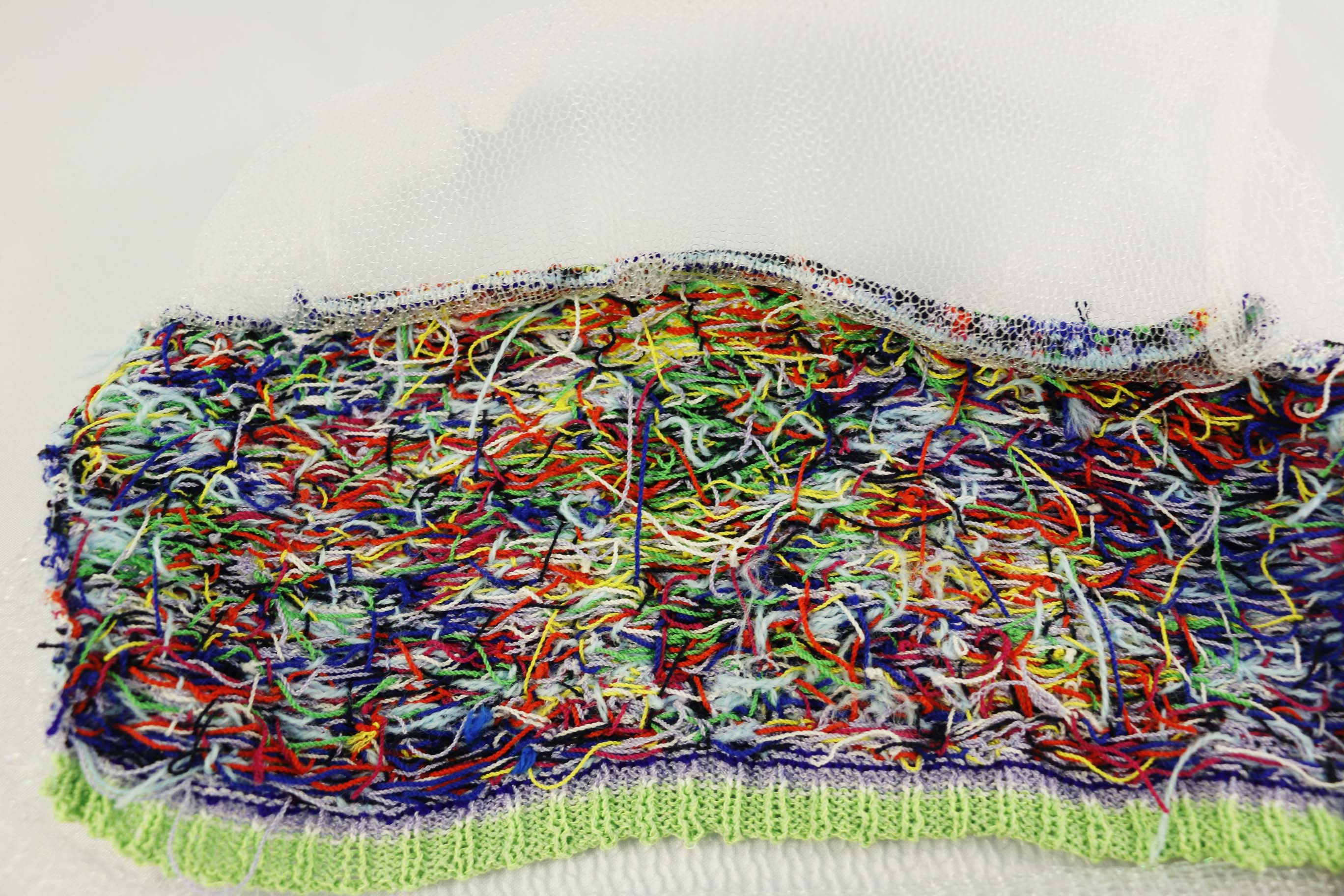

1. Single-bed Fair Isle: All hand-manipulated, which caused uneven surface tension. Long floats at the back.

2. Single-bed intarsia: Semi-hand-manipulated, with a more even surface than the first piece. Long floats at the back, though fewer than in the first piece.

3. Double-bed jacquard: Machine knit. No floats.

Among these three techniques, I prefer multicolor intarsia to the other two. Intarsia is a good way to avoid uneven tension when hand manipulation is needed. Double-bed jacquard solves both the tension problem and the float problem, but because so many colors were used, the pattern is elongated and the back side shows through on the front.

The video below documents the knitting process of the second piece, using single-bed intarsia.

Technique and equipment : Single-bed 9-color intarsia on both Brother standard and bulky machines

Time: 40 hours of knitting + 10 hours of sewing

Internet of Knitted wearables

BRANDING

Coming soon......

SPECULATION & REFLECTION